Even experienced C/C++ programmers often mix up the terms integer overflow and wrap-around, and they are just as often confused about the ramifications. This post attempts to clear things up a little.

OVERFLOW AND UNDERFLOW

In C (and C++), integer types (like the signed and unsigned versions of char, short, and int) have a fixed bit-size. Due to this fact, integer types can only support certain value ranges. For unsigned int, this range is 0 to UINT_MAX, for (signed) int INT_MIN to INT_MAX. On a typical platform, these constants have the following values:

    UINT_MAX   +2^32 - 1   (+4294967295)
    INT_MIN    -2^31       (-2147483648)
    INT_MAX    +2^31 - 1   (+2147483647)

The actual values depend on many factors, for instance, the native word size of a platform and whether 2’s complement representation is used for negative values (which is almost universally the case). Consult your compiler’s limits.h header file for details.

Overflow happens when an expression’s value is larger than the largest value supported by a type; conversely, underflow occurs if an expression yields a value that is smaller than the smallest value representable by a type. For instance:

    unsigned int ui = UINT_MAX;
    ++ui; // Overflow.

    ui = 0;
    --ui; // Underflow.

It’s common among programmers to use the term overflow for both overrunning and underrunning a type’s value range. And so shall I, for the rest of this discussion.

WRAP-AROUND

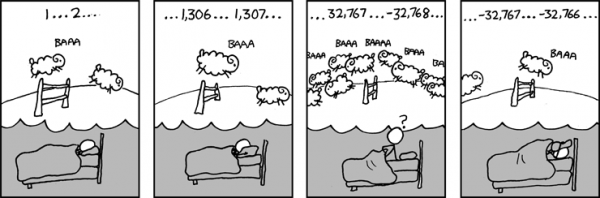

Now that we know what overflow is, we can tackle the question of what happens on overflow. One possibility is what is conventionally referred to as wrap-around. Wrap-around denotes that an integer type behaves like a circle; that is, it has no beginning and no end. If you add one to the largest value, you arrive at the smallest; if you subtract one from the smallest value, you get the largest.

Wrap-around is, however, only one way to handle integer overflow. Other possibilities exist, ranging from saturation (the overflowing value is set to the largest/smallest value and stays there), to raising an exception, to doing whatever an implementation fancies.

AND THE C LANGUAGE SAYS…

If you want to find out how C (and C++) handles integer overflow, you have to take a look at section 6.2.5 “Types” of the C99 standard, the following sentence in particular:

“A computation involving unsigned operands can never overflow, because a result that cannot be represented by the resulting unsigned integer type is reduced modulo the number that is one greater than the largest value that can be represented by the resulting type”

Which means in plain English:

0. Apparently, there is overflow (as defined above) and C-overflow (as used by the standard). C-overflow is more like an error condition that occurs if overflow behavior is not defined for a type.

1. Unsigned integer types wrap around on overflow; “reduced modulo the number that is one greater than the largest value” is just a fancy name for it. Thus, unsigned integer overflow is well defined and not called overflow by the language standard.

2. Nothing is explicitly said about signed integer types. There are, however, various hints in the standard that signed integer overflow is undefined, for instance:

3.4.3 Undefined Behavior: An example of undefined behavior is the behavior on integer overflow.

J.2 Undefined behavior: The value of the result of an integer arithmetic or conversion function cannot be represented.

To sum it up: on overflow, unsigned integers wrap around, whereas signed integers “overflow” into the realm of undefined behavior (unlike Java and C#, BTW, where signed integers are guaranteed to wrap around).

SIGNED OVERFLOW

You might have believed (and observed) that in C signed integers also wrap around. For instance, these asserts will hold on many platforms:

    int i = INT_MIN;
    assert(--i == INT_MAX);
    assert(++i == INT_MIN);

Both asserts held when I compiled this code on my machine with gcc 7.4; the following one holds only if optimizations are disabled (-O0):

    i = INT_MAX;
    assert(i + 42 < i);

From -O2 on, gcc enables the option -fstrict-overflow, which means that it assumes that signed integer expressions cannot overflow. Thus, the expression i + 42 < i is considered false, regardless of the value of i. You can control how gcc treats signed integer overflow with the options -fstrict-overflow, -fwrapv, and -ftrapv. For maximum portability, however, you should always steer clear of signed integer overflow and never assume wrap-around.

SIGNED OVERFLOW THAT ISN’T

What about this code? Does this summon up undefined behavior, too? Doesn’t the resulting sum overflow the value range of the short type?

    short x = SHRT_MAX;
    short y = SHRT_MAX;
    short sum = x + y;

The short (pun intended!) answer is: it depends!

It depends because before adding x and y, a conforming C compiler promotes both operands to int. Thus, to the compiler, the code looks like this:

    int isum = (int)x + (int)y;
    short sum = (short)(isum);

Adding two ints that hold a value of SHRT_MAX doesn’t overflow, unless (and that’s why it depends) you are hacking away on an ancient 16-bit platform where sizeof(short) == sizeof(int).

But even on a typical 32- or 64-bit platform, what about the assignment of the large integer result to the short variable sum? This surely overflows, doesn’t it? Doesn’t this yield undefined behavior? The answer in this case is a clear ‘no’. It’s rather ‘implementation-defined’. Let’s see.

SIGNED INTEGER TYPE CONVERSIONS

In the previous example, a value of a larger signed type is converted to a smaller signed type. This is what the C99 standard has to say about it:

6.3.1.3 Signed and unsigned integers

When a value with integer type is converted to another integer type other than _Bool, if the value can be represented by the new type, it is unchanged.

Otherwise, if the new type is unsigned […]

Otherwise, the new type is signed and the value cannot be represented in it; either the result is implementation-defined or an implementation-defined signal is raised.

What an implementation will choose to do, in practice, is wrap around.

    int i = SHRT_MAX + 1;
    short s = i;
    assert(s == SHRT_MIN);

Why does a compiler behave like this? You can find an explanation by Linus Torvalds himself:

“Bit-for-bit copy of a 2’s complement value. Anything else would be basically impossible for an optimizing compiler to do unless it actively _tried_ to screw the user over.”

To sum it up:

1. Unsigned integers wrap around on overflow. 100 percent guaranteed.

2. Signed integer overflow means undefined behavior. Don’t rely on wrap-around.

3. Type conversions to smaller signed types will very likely wrap around. Let’s call this “Torvalds-defined behavior”.
