“The fishermen know that the sea is dangerous and the storm terrible, but they have never found these dangers sufficient reason for remaining ashore.”

— Vincent Van Gogh

Last December, I had a lot of fun working on “Linux Magazin’s” programming contest. I was both surprised and excited when the editors contacted me in January and told me that I had taken second place and would even receive a prize! But when I found out about the details, my initial joy quickly subsided, partly because of them, but much more because of me. Let me explain.

THE CHALLENGE

Since “Linux Magazin” is only available in German, I will briefly summarize what the competition was all about.

Imagine there’s a fisherman (or fisherwoman, or fisherperson, whatever you prefer) sitting in a boat on a pond. The pond is rectangular in shape and divided into M x N squares. The initial position of the boat is the square at column 0, row 0. Thus, using x and y as column and row coordinates, the boat’s initial position corresponds to x = 0 and y = 0. The fisherman can steer the boat through the pond by incrementing (or decrementing) either x or y by one with every move he makes.

Each of the squares in the pond may contain a fish. The aim is to write a Python script that picks up all the fish using a minimum number of moves.

Here’s an example of a 7 x 5 pond with the boat ‘B’ at the initial position and three fish, denoted by asterisks:

          0   1   2   3   4   5   6
        ┌───┬───┬───┬───┬───┬───┬───┐
      0 │ B │   │   │   │   │   │   │
        ├───┼───┼───┼───┼───┼───┼───┤
      1 │   │   │   │ * │   │   │   │
        ├───┼───┼───┼───┼───┼───┼───┤
      2 │   │ * │   │   │   │   │   │
        ├───┼───┼───┼───┼───┼───┼───┤
      3 │   │   │   │   │ * │   │   │
        ├───┼───┼───┼───┼───┼───┼───┤
      4 │   │   │   │   │   │   │   │
        └───┴───┴───┴───┴───┴───┴───┘

One straightforward solution is to scan the whole pond, column by column, row by row:

    # Visit every square of the pond and collect any fish found there.
    for row in range(N):
        for col in range(M):
            moves += 1
            if pond[row][col] == FISH_PRESENT:
                fish_count += 1
                pond[row][col] = EMPTY  # assignment, not comparison

Obviously, this exhaustive scan wouldn’t win you a prize as it requires too many moves (M multiplied by N, to be precise).

GO FOR GOLD, ER, FISH

After scratching their heads for a while, most developers will realize that this is a variant of the traveling salesman (or saleswoman, or salesperson, whatever you prefer) problem. The difference is that the fisherman, unlike the traveling salesman, doesn’t have to return to his origin after having visited all other nodes [1].

After some more head-scratching, some developers will even remember that this is an NP-hard problem and that finding the optimal solution by trying out all possible routes takes O(n!) time, where n is the number of fish in the pond.

Because brute force scales so badly, a better (even if not optimal) idea is needed. A reasonable choice to start with is the “nearest neighbor” strategy, which always visits the node (fish) closest to the current position next. This is what I tried first; it required only 30 lines of code and allowed me to implement some initial unit tests [2].
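The nearest neighbor strategy can be sketched as follows. This is a minimal illustration, not the 30-line contest script: the names `manhattan` and `nearest_neighbor_route` are made up for this example, and fish are assumed to be given as a list of (x, y) tuples rather than as a pond grid. Since the boat moves one square horizontally or vertically at a time, the number of moves between two squares is their Manhattan distance.

```python
def manhattan(a, b):
    """Number of boat moves between two squares given as (x, y) tuples."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def nearest_neighbor_route(start, fish):
    """Greedy route: always head for the closest remaining fish.

    Returns (total_moves, visiting_order). When several fish are equally
    near, the one that appears first in the input list is chosen.
    """
    remaining = list(fish)  # copy, so the caller's list is not modified
    position = start
    moves = 0
    order = []
    while remaining:
        target = min(remaining, key=lambda f: manhattan(position, f))
        moves += manhattan(position, target)
        remaining.remove(target)  # the fish is picked up
        order.append(target)
        position = target
    return moves, order


# The 7 x 5 example pond: boat at (0, 0), fish at (3, 1), (1, 2), (4, 3).
moves, order = nearest_neighbor_route((0, 0), [(3, 1), (1, 2), (4, 3)])
print(moves, order)  # 9 [(1, 2), (3, 1), (4, 3)]
```

Each step scans all remaining fish, so this naive version takes time proportional to n squared for n fish.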

Most developers have learned the hard way that it’s essential to find out about the typical use cases before optimizing a system. Otherwise, you will end up with what is referred to as “fast slow code”. Unfortunately, there are no limits in the pond problem: the pond could consist of millions of squares and there could be millions of fish. How can you differentiate yourself from all the other contestants who will most likely also employ a nearest neighbor search?

IMPROVEMENTS

I wasn’t satisfied with my nearest neighbor approach. All kinds of problems and corner cases came to my mind. Consider the following pond set-up:

          0   1   2   3   4   5   6
        ┌───┬───┬───┬───┬───┬───┬───┐
      0 │ B │ * │ * │ * │ * │ * │ * │
        ├───┼───┼───┼───┼───┼───┼───┤
      1 │   │   │   │   │   │   │   │
        ├───┼───┼───┼───┼───┼───┼───┤
      2 │ * │   │   │   │   │   │   │
        ├───┼───┼───┼───┼───┼───┼───┤
      3 │   │   │   │   │   │   │   │
        ├───┼───┼───┼───┼───┼───┼───┤
      4 │   │   │   │   │   │   │   │
        └───┴───┴───┴───┴───┴───┴───┘

According to the nearest neighbor strategy, the fisherman would first visit x = 1, y = 0 and then process the whole first row by repeatedly moving one square to the right. After reaching x = 6, y = 0, he would move back all the way to x = 0, y = 2. What a waste!

Even though I knew about this problem’s factorial growth, I thought about doing an exhaustive O(n!) search, at least for smaller ponds with few fish. This approach would yield the optimal solution (which would start with picking up the fish at x = 0, y = 2 first, in the example above). When the problem size crossed a certain threshold, I would fall back to the nearest neighbor strategy.
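A brute-force search of this kind can be sketched with `itertools.permutations` from the standard library. This is only an illustration under assumptions, not the contest code: the helper names are hypothetical, and fish are again assumed to be (x, y) tuples.

```python
from itertools import permutations


def manhattan(a, b):
    """Number of boat moves between two squares given as (x, y) tuples."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def route_length(start, order):
    """Total moves needed to visit the squares in `order`, beginning at `start`."""
    moves = 0
    position = start
    for target in order:
        moves += manhattan(position, target)
        position = target
    return moves


def exhaustive_route(start, fish):
    """Try every visiting order (n! of them) and keep the shortest one."""
    best = min(permutations(fish), key=lambda order: route_length(start, order))
    return route_length(start, best), list(best)


# The degenerate pond: six fish in row 0 plus one fish at x = 0, y = 2.
pond_fish = [(x, 0) for x in range(1, 7)] + [(0, 2)]
moves, order = exhaustive_route((0, 0), pond_fish)
print(moves, order[0])  # 10 (0, 2)
```

With 7 fish this checks 5,040 orderings; every additional fish multiplies that count by the new number of fish, which is why the approach collapses so quickly.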

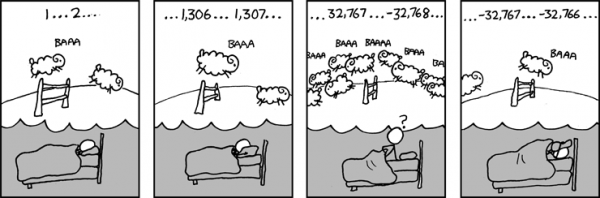

Alas, my measurements showed discouraging results. It took only a couple of seconds to solve ponds with up to ten fish. However, it took hours to find the optimal solution if there were more than 15 fish in the pond [3]. Since I assumed that the ponds against which the submissions would be tested were large and contained lots of fish, I ditched this idea immediately.

Then followed several frustrating days with many dead-end experiments. Two days before the submission date, I decided to try out one final idea. I knew from my previous testing that visiting all permutations was not feasible. But instead of always making the closest fish my first target, I could try out every square containing a fish as the initial target and use the nearest neighbor strategy only from that square onward. Of all these runs, I would pick the route that required the fewest moves.

This was easy to implement and increased the overall run-time only by a factor of n, the total number of fish. Even though this approach couldn’t always find the optimal route, it solved my degenerate pond example nicely, even for large N, M, and n, so I submitted it.
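The idea of forcing each fish in turn to be the first target might look roughly like this. It is a sketch under assumptions rather than the submitted script: `best_first_target_route` and the helpers are hypothetical names, and fish are assumed to be a list of (x, y) tuples.

```python
def manhattan(a, b):
    """Number of boat moves between two squares given as (x, y) tuples."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def nearest_neighbor_route(start, fish):
    """Plain greedy route; ties go to the fish listed first."""
    remaining = list(fish)
    position, moves, order = start, 0, []
    while remaining:
        target = min(remaining, key=lambda f: manhattan(position, f))
        moves += manhattan(position, target)
        remaining.remove(target)
        order.append(target)
        position = target
    return moves, order


def best_first_target_route(start, fish):
    """Force each fish to be the first target once, continue greedily from
    there, and keep the shortest of the n resulting routes."""
    best_moves, best_order = 0, []
    for i, first in enumerate(fish):
        rest = fish[:i] + fish[i + 1:]
        tail_moves, tail_order = nearest_neighbor_route(first, rest)
        moves = manhattan(start, first) + tail_moves
        if not best_order or moves < best_moves:
            best_moves, best_order = moves, [first] + tail_order
    return best_moves, best_order


# The degenerate pond: six fish in row 0 plus one fish at x = 0, y = 2.
pond_fish = [(x, 0) for x in range(1, 7)] + [(0, 2)]
print(nearest_neighbor_route((0, 0), pond_fish)[0])   # 14
print(best_first_target_route((0, 0), pond_fish)[0])  # 10
```

The outer loop runs the greedy search once per fish, which is where the extra factor of n comes from.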

AND THE WINNER IS… NOT ME!

When the editors contacted me about a month later, I couldn’t wait to find out why I didn’t win, what the actual test data was and so on. What I saw, however, was quite frustrating: none of the five ponds they used on the submitted scripts was large or complex. In fact, all of them were laid out in such a way that a simple nearest neighbor search would yield optimal solutions, so the improvement that I just explained to you had no effect whatsoever. When I fed my degenerate pond to the winner’s script, it required 14 moves, while mine only needed 10.

But I lost anyway. I lost because one pond was set up such that at some point there were multiple, equally near fish to choose from. While my naive implementation simply picked the first one, the winning algorithm explored all choices and selected the one that resulted in the shortest overall path, which is not only smart, but also easy to implement.
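The tie-handling idea can be sketched as a recursive variant of the nearest neighbor search. The winner’s actual script is not reproduced here; this is just one plausible reading of the description, with hypothetical names and fish assumed to be (x, y) tuples.

```python
def manhattan(a, b):
    """Number of boat moves between two squares given as (x, y) tuples."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def nearest_neighbor_with_ties(position, remaining):
    """Greedy search that branches whenever several fish are equally near.

    Returns (total_moves, visiting_order) of the best route found.
    """
    if not remaining:
        return 0, []
    nearest = min(manhattan(position, f) for f in remaining)
    best = None
    for i, candidate in enumerate(remaining):
        if manhattan(position, candidate) != nearest:
            continue  # only the closest fish are considered
        rest = remaining[:i] + remaining[i + 1:]
        tail_moves, tail_order = nearest_neighbor_with_ties(candidate, rest)
        total = nearest + tail_moves
        if best is None or total < best[0]:
            best = (total, [candidate] + tail_order)
    return best


# Two fish are equally near the boat at (0, 0): (0, 2) and (2, 0).
# Always taking the first one listed, (0, 2), leads to 8 moves.
fish = [(0, 2), (2, 0), (0, 3)]
print(nearest_neighbor_with_ties((0, 0), fish))
# (7, [(2, 0), (0, 2), (0, 3)])
```

Without ties this behaves exactly like the plain greedy search. With many ties the number of branches can grow quickly, and the recursion depth equals the number of fish, so a sketch like this only suits small ponds.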

LESSONS LEARNED

There’s no point in sugar-coating it: I was quite disappointed. Why were the ponds so small and simple? I could have used the sluggish O(n!) search for almost all of the ponds and won easily. But I also beat myself up for not even thinking about the possibility of multiple nearest neighbors, which would have naturally led to the improvement made by the winner.

A couple of days later, I finally got over it. After all, I came in second, had a lot of fun, and something to post about. But the biggest benefit is the valuable lesson that I (re-)learned: when working on optimization problems, assume nothing!

I assumed that the ponds were big; that’s why I didn’t include the O(n!) search, even though I had already implemented it. I assumed that the ponds were obscenely complex, which led me to implement the improvement mentioned above. I was so focused on tricky ponds that the problem of simple ponds with multiple nearest neighbors didn’t even occur to me. That’s target fixation at its best (or worst, if you prefer).

Once again, the journey was the real reward. What I got for a prize, you wonder? A 500+ page “Java for Beginners” tome, in German. ‘Nuff said!

________________________________

[1] Computer scientists would call this a Hamiltonian path with a fixed start point, but no fixed end point.

[2] Why unit tests for a programming contest? Because a fast solution that is incorrect is worth nothing! Some of my first tests revealed that a fish at the initial boat position (x = 0, y = 0) wasn’t picked up. Another test showed that I forgot to update the pond after picking up the fish, which obviously makes a difference when you come across a cell containing a fish twice. Unit tests are always necessary and always good.

[3] Python + O(n!) == no-no

I’ve finally found time and motivation to resume work on the “Happy Path to Modern C++” project. I’m sure it now contains enough code snippets to give you a good overview of C++11. As a matter of fact, I can’t think of an easier way to learn C++11, provided you’re already well-versed in C++ and C++98, in particular.
